Redegal, Google Partner Premier agency 2025


The European Union has established the first specific legal framework for artificial intelligence with Regulation (EU) 2024/1689, known as the AI Act. It aims to ensure that AI is used safely, ethically, and transparently, protecting fundamental rights and preventing discrimination. This post provides a complete guide to this regulation.

Artificial intelligence is no longer a lawless territory in Europe. Regulation (EU) 2024/1689, the first European legal framework specifically dedicated to artificial intelligence, was adopted by the European Parliament and the Council on June 13, 2024, and is known as the AI Act (the European Union's Artificial Intelligence Regulation).

Below we explain how to use AI correctly in your business, in accordance with the rules and the Artificial Intelligence Regulation of the European Union.

The Artificial Intelligence Regulation applies to all companies, organisations, and developers that create, integrate, or use AI systems within the EU. Even companies outside Europe may be subject to it if they sell or use AI in the European market. If you work in digital marketing, technology, product development, or compliance, it’s important to understand and comply with these new rules.

The main objective of the EU Regulation is to ensure that AI technologies and platforms respect human rights, prevent discrimination, protect personal data, and are implemented transparently and responsibly in the market. This regulation was created to balance technological innovation with the protection of fundamental rights. It does not aim to halt the advancement of AI, but rather to ensure that this advancement is carried out with legal certainty and ethical considerations.

In addition to this central objective, which seeks to create trustworthy AI in Europe, the EU Regulation also defines other specific objectives, among which the following stand out:

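Regulation (EU) 2024/1689 entered into force across the EU on August 1, 2024, and applies in stages: the prohibitions on unacceptable-risk practices apply from February 2, 2025, the obligations for general-purpose AI models from August 2, 2025, and most remaining provisions from August 2, 2026, with certain high-risk rules following on August 2, 2027. It establishes the legal framework for the use and development of AI in the European Union, defining risk assessment criteria, obligations for providers and deployers (the regulation's term for professional users), and penalties for non-compliance. The EU Regulation on Artificial Intelligence focuses primarily on situations where AI may interfere with human rights and fundamental freedoms, such as surveillance, employment, education, health, or justice.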

This EU regulation also aims to prevent potentially abusive AI practices, such as behaviour manipulation, discrimination, or the use of AI without human oversight. For businesses, the regulation establishes clear and predictable limits to ensure that technological innovation develops ethically and legally. It primarily applies to companies that develop, implement, or use AI systems, such as automated recruitment tools, chatbots, customer data analytics, targeted advertising, or algorithm-based quality control.

Despite its broad scope, the regulation includes some exemptions. It does not apply where artificial intelligence is used solely for personal, non-professional purposes, nor to AI systems and models intended exclusively for scientific research and development.

To understand the framework of the Artificial Intelligence Regulation, it is helpful to first understand how these systems work in practice. According to the European Parliament’s official website, AI systems are capable of perceiving their environment, interpreting that information, and making goal-oriented decisions. In many cases, they can adapt their behavior by analyzing the effects of their previous actions, acting with varying levels of autonomy. These capabilities allow AI to be used in applications such as web search, content recommendation, fraud detection, and digital assistance.

The EU's position is that the greater the influence of AI on decisions that affect real life, such as employment, credit, health, or justice, the greater the level of control and transparency should be.

There are also several types of artificial intelligence, ranging from simple recommendation systems (like Spotify playlists) to generative models that create images, text, or audio. Today, when most people think of AI, they tend to associate it primarily with generative AI, as it’s the most visible and accessible face of this technology, but there is more than one type of AI. Some of the main types of artificial intelligence are:

Generative AI, for example: ChatGPT, Gemini, DALL·E.

It is worth noting that the Artificial Intelligence Regulation strengthens the protection of human rights, ensuring that the use of AI does not jeopardize privacy, equality, or human control over automated decisions.

Generative AI is a type of artificial intelligence capable of creating new and original content, such as text, images, code, music, or designs. It is based on deep learning models that analyze large volumes of data and generate creative results in response to natural language instructions.

For its part, integrated AI, according to Google itself, refers to AI models built directly into the device or browser, such as Gemini Nano. This AI doesn’t focus on creating entirely new content, but rather on executing specific functions quickly, privately, and efficiently, such as summarizing text, translating, assisting with writing, or improving the user experience. By operating locally, it offers advantages such as greater privacy, lower latency, and the ability to function even with limited connectivity.

In summary:

Both can coexist, but they serve different purposes: one is geared towards creativity and the production of new content, while the other focuses on improving device functionalities and offering AI tools directly in the browser or in applications.

The Artificial Intelligence Regulation does not apply the same requirements to all systems. Instead, the European Union has created a classification model based on the level of risk that AI poses to people and society.

The EU Regulation on Artificial Intelligence establishes four risk categories for AI systems:

Unacceptable risk covers AI systems considered a direct threat to fundamental rights and the security of individuals. Practices such as mass biometric surveillance, social scoring, cognitive manipulation of vulnerable groups, and the indiscriminate scraping of data for facial recognition databases are prohibited in the EU. These are cases in which AI could control behavior, exploit vulnerabilities, or discriminate against citizens.

High risk includes AI applications that operate in sensitive areas and can significantly impact people’s lives, such as health, justice, credit, education, employment, and public services. These systems can only be used if they meet rigorous requirements: mandatory human oversight, comprehensive technical documentation, audits, decision logging, bias mitigation, and demonstrated robustness before being released to the market.

Limited risk refers to uses of AI that do not directly interfere with rights or access to essential services, but which require transparency, such as commercial chatbots or AI-generated content. In these cases, it is mandatory to clearly inform users that they are interacting with a machine and to label AI-generated content, such as deepfakes or texts intended to inform the public.

Minimal risk covers most AI systems currently in use in the EU, such as spam filters, movie recommendations, and AI in video games. These do not have a significant impact on rights or security, so they can be used without additional legal requirements. The aim is to avoid hindering innovation where the risk is virtually nonexistent.
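To make the four-tier model (unacceptable, high, limited, and minimal risk) more concrete, here is a minimal Python sketch of how a team might run a first-pass internal triage of its AI use cases. Everything in it is an assumption made for illustration: the names `RiskTier`, `triage`, and `OBLIGATIONS`, and the keyword sets, which simply reuse the examples mentioned in this post. The regulation's actual classification depends on its legal definitions and annexes, so this is a way to organise an inventory, not a way to determine a system's legal status.

```python
from enum import Enum
from typing import Optional


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical keyword sets built from the examples in this post.
# They are NOT the legal definitions used by the regulation.
PROHIBITED_USES = {"social scoring", "mass biometric surveillance"}
SENSITIVE_AREAS = {"health", "justice", "credit", "education",
                   "employment", "public services"}
TRANSPARENCY_USES = {"chatbot", "ai-generated content", "deepfake"}

# Simplified summary of what each tier implies, as described in the post.
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: ["prohibited in the EU"],
    RiskTier.HIGH: ["human oversight", "technical documentation",
                    "decision logging", "bias mitigation"],
    RiskTier.LIMITED: ["tell users they are interacting with a machine",
                       "label AI-generated content"],
    RiskTier.MINIMAL: [],
}


def triage(use_case: str, area: Optional[str] = None) -> RiskTier:
    """Return a provisional tier, checking the strictest tier first."""
    use = use_case.strip().lower()
    if use in PROHIBITED_USES:
        return RiskTier.UNACCEPTABLE
    if area is not None and area.strip().lower() in SENSITIVE_AREAS:
        return RiskTier.HIGH
    if use in TRANSPARENCY_USES:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL


if __name__ == "__main__":
    for use, area in [("chatbot", None),
                      ("cv screening", "employment"),
                      ("spam filter", None)]:
        tier = triage(use, area)
        print(use, "->", tier.value, OBLIGATIONS[tier])
```

Checking the strictest tier first means a use case that matches several categories is always flagged at the higher level, which is the safer default for an internal inventory that will later be reviewed by legal or compliance staff.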

Companies that develop, integrate, or use AI systems within the EU now have clear legal obligations. The Artificial Intelligence Regulation stipulates that those who create or use AI with a real impact on human decisions must guarantee transparency, security, oversight, and respect for fundamental rights.

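According to Chapter III (Articles 8 to 15) of the AI Regulation, the main obligations, which apply only to AI systems classified as “high risk”, cover risk management, data governance, technical documentation, record-keeping, transparency towards deployers, human oversight, and accuracy, robustness, and cybersecurity.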

The impact of the EU Regulation on Artificial Intelligence varies depending on the type of business and its level of technological dependence. Startups, agencies, SaaS platforms, e-commerce businesses, and companies with advanced automation will need to adapt their processes. This is not just a legal matter, but also involves compliance costs and operational changes.

According to the Impact Assessment accompanying the proposed European AI Regulation, the main impacts include:

The regulation also creates a new competitive dynamic: those who anticipate and comply now will be prepared to operate in the European market with legal certainty. Those who ignore it risk fines, loss of market share, or even a ban on using certain systems.

The EU Regulation on Artificial Intelligence establishes clear rules to ensure that working professionally with AI is safe, ethical, and transparent. It does not stifle innovation, but sets limits based on the risks it poses to people.

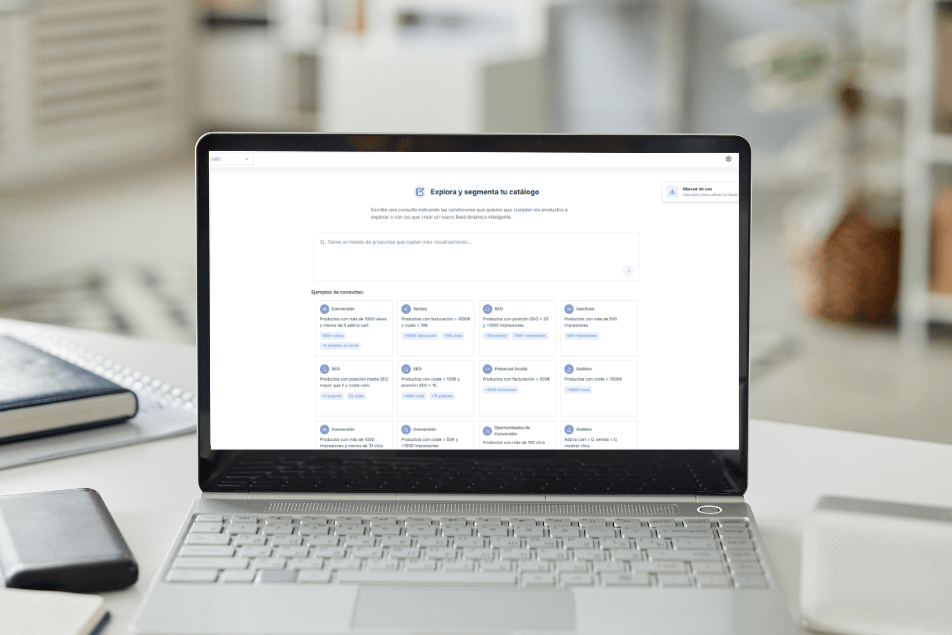

If you want to work with AI in your business, at Redegal we offer comprehensive SEO + AI services, analytics, and more. We also have our own tools to boost your e-commerce, such as Binnacle Data and Boostic.cloud, which can help you implement AI strategies securely, in compliance with European regulations, and focused on results. Let’s talk.

